Fueled by the late 2022 launch of ChatGPT, generative AI has established itself as arguably the key trend that will shape the next 10 years of technology adoption. After the product’s initial hype and continued, explosive growth, though, public sentiment began to shift, and existential questions emerged about how large language models (LLMs) are trained and deployed, and about whether due credit is always provided.

A wide and diverse swath of content creators and the organizations representing them, including The New York Times Company, the Authors Guild, Getty Images and Sarah Silverman, have filed suit against AI firms over the past 12 months. While the specifics of each case differ, they are all fundamentally based on a lack of attribution and/or remuneration for proprietary work that was allegedly used to train LLMs. And this is to say nothing of the legal matters in which generative AI systems are being used to inform research, which has resulted in a growing number of legal briefs citing fake cases.

The common thread among these issues? A lack of transparency into how generative AI systems are trained, and into who accessed the training data and when, is exposing organizations to massive liability, while proprietary IP is often taken without due credit.

It’s thus with good reason that businesses are erring on the side of caution when it comes to integrating AI capabilities. Generative AI models work at a mind-boggling speed and consume enormous amounts of data at once. Operating at this pace and with a general lack of oversight can lead to discriminatory biases, hallucinations and flawed insights. It’s no wonder that AI is still seen as a black box – and that there’s such a pressing need for more established guardrails for generative AI systems. Realizing this technology’s immense potential is about more than innovation: it requires a renewed approach to vetting, processing and, in many cases, protecting the information feeding LLMs.

A novel approach to increase AI transparency

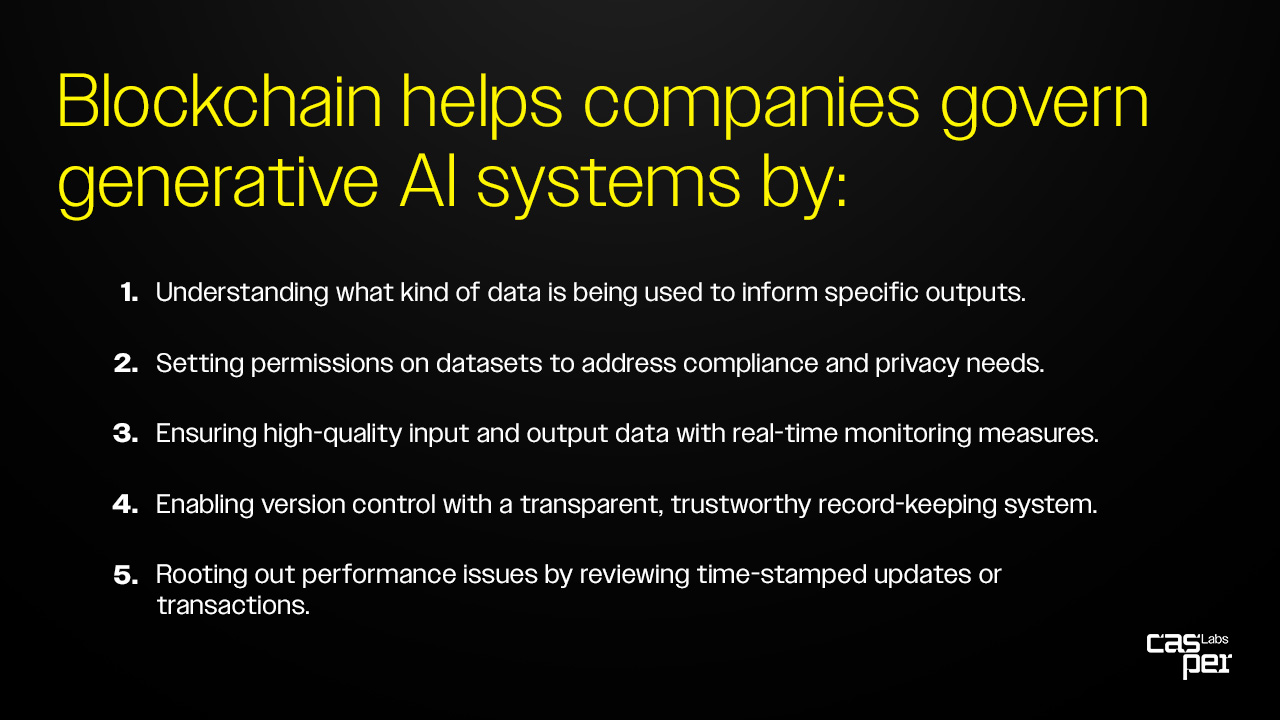

For AI systems to operate effectively and within designated parameters, businesses need a secure, transparent and scalable data infrastructure that enables highly serialized record-keeping. These are the key factors to enabling a healthy AI governance model.

Blockchain technology is uniquely suited to serve these needs. At its core, it provides a ledger that allows users to monitor the quality of data inputs for AI models, while also clearly logging any attempts to tamper with said data.
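To make the tamper-logging idea concrete, here is a minimal sketch of a hash-chained, append-only log in Python. It is an illustration only, not the Casper Labs or IBM implementation: the function names, the record fields and the dataset names are all hypothetical, and a real blockchain adds replication and consensus across many parties on top of this basic structure.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash, payload):
    """Hash an entry's payload together with the previous entry's hash."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(ledger, payload):
    """Append a record that is chained to the entry before it."""
    prev_hash = ledger[-1]["hash"] if ledger else GENESIS_HASH
    entry = {
        "prev": prev_hash,
        "payload": payload,
        "hash": entry_hash(prev_hash, payload),
    }
    ledger.append(entry)
    return entry


def first_tampered_index(ledger):
    """Return the index of the first entry that fails verification, or None."""
    prev_hash = GENESIS_HASH
    for i, entry in enumerate(ledger):
        expected = entry_hash(entry["prev"], entry["payload"])
        if entry["prev"] != prev_hash or entry["hash"] != expected:
            return i
        prev_hash = entry["hash"]
    return None


# Hypothetical records describing datasets used to train a model.
ledger = []
append_entry(ledger, {"dataset": "claims-2023.csv", "sha256": "abc123"})
append_entry(ledger, {"dataset": "quotes-2023.csv", "sha256": "def456"})
```

Because each entry's hash covers the previous entry's hash, editing any earlier record invalidates the chain from that point on, which is what makes tampering visible to anyone who re-verifies the log.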

It’s for this reason that the team at Casper Labs is working closely with IBM Consulting to develop an AI governance tool to help clients gain greater auditability and visibility into how their AI systems work.

The cutting-edge blockchain-powered product will initially be built on the IBM watsonx.governance platform to help companies exercise better control over their AI. From vetting the datasets used to train their models, to time-stamping system updates and participant contributions, the governance solution will unlock a secure record of an AI model’s comprehensive development journey. Now businesses can open a window into how their AI models are adapting over many cycles of training, evaluation, and adjustment.

Bringing critical visibility to generative AI systems

During The Hub at Davos in January, Casper Labs CEO Mrinal Manohar presented a product demo with Shyam Nagarajan—IBM’s blockchain and responsible AI development expert—to illustrate how our solution can arm companies with an easy, efficient way to audit their AI operations.

In the demo, an insurance agent uses a third-party AI chatbot to generate quotes for customers. When it begins to yield inaccurate outputs, the chatbot company leverages blockchain’s immutable digital ledger to “change the context back” to when the AI model was operating smoothly. As long as the insurance agent can provide a specific session number (i.e. the identifier located in the top right corner of each chat), the company can trace back to a moment in time before the model began to malfunction.
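The lookup in that scenario can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the demoed product: it assumes the ledger yields a time-ordered list of model deployments and a mapping from session numbers to timestamps, and it assumes the rollback target is the version deployed immediately before the one that served the flagged session. All names and values here are hypothetical.

```python
import bisect

# Hypothetical deployment history read from the ledger: (version, deployed_at),
# sorted by deployment time (Unix timestamps).
history = [("v1", 1000), ("v2", 2000), ("v3", 3000)]

# Hypothetical session log: session number -> time the session took place.
sessions = {"S-1042": 3500}


def version_index_at(history, timestamp):
    """Index of the model version that was live at the given timestamp."""
    deployed_times = [deployed_at for _, deployed_at in history]
    index = bisect.bisect_right(deployed_times, timestamp) - 1
    if index < 0:
        raise ValueError("no model version was deployed at that time")
    return index


def rollback_target(history, sessions, session_id):
    """Version deployed just before the one that served the flagged session."""
    index = version_index_at(history, sessions[session_id])
    if index == 0:
        raise ValueError("no earlier version to roll back to")
    return history[index - 1][0]


target = rollback_target(history, sessions, "S-1042")
```

In practice the decision about which earlier state counts as "operating smoothly" would likely rest on evaluation records rather than deployment order alone; the sketch only shows how a session identifier can anchor the search in an ordered, trustworthy history.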

“Blockchain solves the AI governance puzzle. It not only brings trust, auditability, and transparency, it also enables workflows between multiple organizations,” said IBM Consulting Executive Director Shyam Nagarajan during the demonstration. “These are absolutely critical [for all AI], but especially for the generative AI software supply chain model.”

If your organization is currently assessing your generative AI governance process and you’d like to be among the first beta users of the solution being jointly developed by Casper Labs and IBM, you can apply for our early adopter program here.